By: David Mor-Ofek

As AI-driven systems become more complex, an AI bill of materials (AI/ML BOM) can help shed light on their components and data sources. This article covers the evolution and benefits of AI/ML BOMs, specifically from a risk management perspective, and examines the difficulty of implementing AI/ML BOMs without adding overhead to engineering processes.

In this article, I’ll cover the following topics:

- The Concept Behind AI/ML BOM

- Generating AI/ML BOM

- Validating AI/ML BOM

- Leveraging GenAI for Automated BOM & Vulnerability Management

Several initiatives are working on a scheme to catalog security-relevant information about AI in the form of an AI bill of materials (AI/ML BOM). Such a scheme may help standardize the tracking of safety, alignment, licenses, and other security-relevant information, and so ease the integration of AI into the world of cybersecurity.

Introduction to BOM

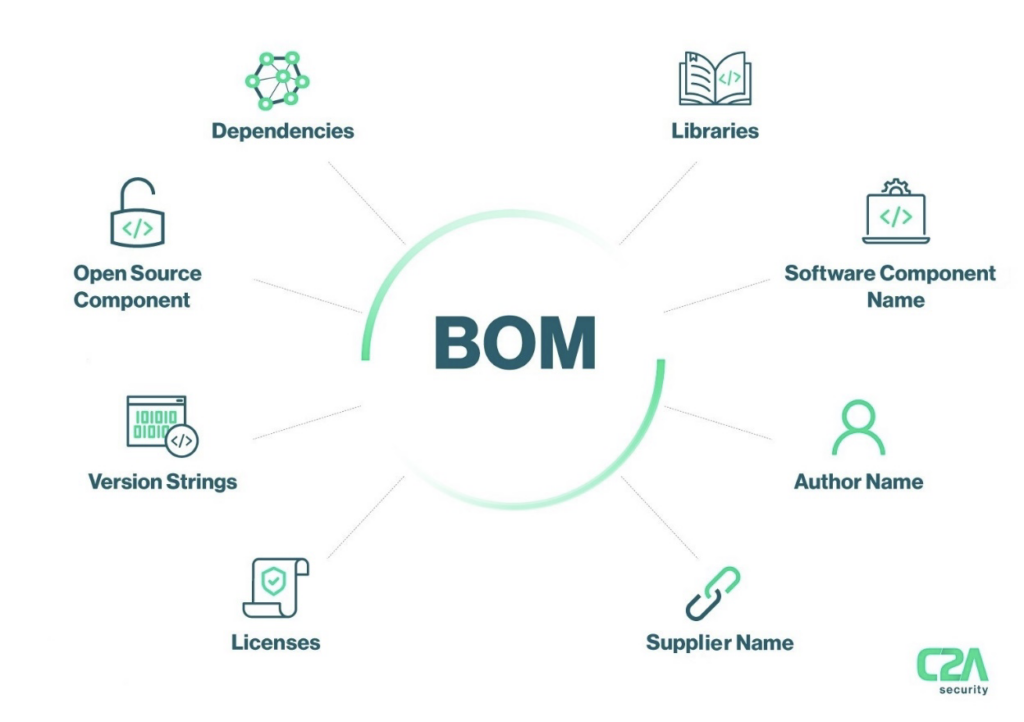

Automotive manufacturers often buy software packages and products from third-party suppliers, Tier 1s, and subcontractors worldwide. Managing these packages and their different components is crucial. That’s where a BOM (bill of materials) comes in. The BOM tracks the origin of each library and provides details on every software component in a vehicle. Scanning these libraries shows what the vehicle's software is built from and helps prioritize fixes.

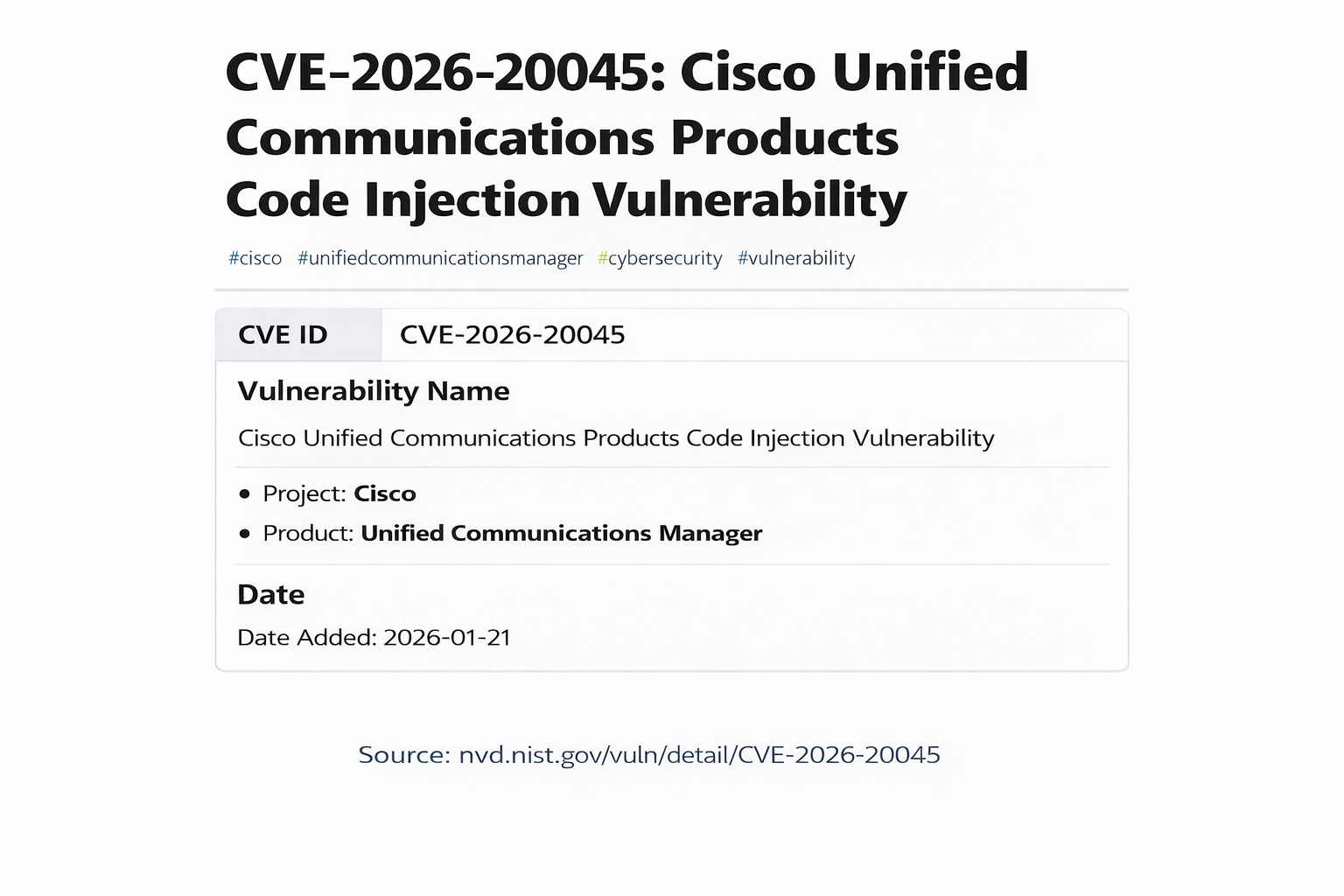

Understanding BOM inventories provides several advantages, including cost savings, reduced security risks, and improved compliance. It assists developers in managing dependencies and identifying security issues. When an inventory contains components affected by CVEs (Common Vulnerabilities and Exposures), a BOM can help identify the vulnerable code and its location.
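
To illustrate how an inventory helps locate vulnerable code, here is a minimal Python sketch. The `Component` type, the `find_affected` helper, the component names, and the advisory ID are all hypothetical and only show the idea; real tools match against CVE feeds and version ranges rather than exact version strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str
    location: str  # where the component is deployed, e.g. an ECU (assumed field)


def find_affected(inventory, advisories):
    """Return (component, advisory_id) pairs for every inventory entry
    whose name and exact version are listed in an advisory."""
    hits = []
    for component in inventory:
        for advisory_id, (name, bad_versions) in advisories.items():
            if component.name == name and component.version in bad_versions:
                hits.append((component, advisory_id))
    return hits


# Hypothetical inventory and advisory data, for illustration only.
inventory = [
    Component("libexample", "1.2.0", "infotainment-ecu"),
    Component("libexample", "1.4.1", "telematics-unit"),
    Component("otherlib", "3.0.0", "gateway"),
]
advisories = {
    "ADVISORY-0001": ("libexample", {"1.2.0", "1.3.0"}),
}

for component, advisory_id in find_affected(inventory, advisories):
    print(f"{advisory_id}: {component.name} {component.version} at {component.location}")
```

Because every entry carries a location, the same lookup that flags a vulnerable version also says where it is deployed, which is what makes fix prioritization possible.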

The SolarWinds hack in 2020 is a clear example of the importance of software bills of materials (SBOMs). Russian hackers injected malware into SolarWinds’ popular tool, Orion, through software updates. The malware spread widely when customers installed these infected updates. If SolarWinds had used a BOM, it might have detected the cyberattack earlier, because the BOM could have revealed the tainted component.

This cyberattack demonstrated the value of transparency in software supply chains. A poor understanding of program components allows hackers to exploit vulnerabilities discreetly. In response to SolarWinds, President Biden issued Executive Order 14028 in May 2021, requiring government technology providers to disclose BOMs. The National Institute of Standards and Technology (NIST) also updated its guidance on supply chain security, specifically on the discipline of Cybersecurity Supply Chain Risk Management (C-SCRM).

The Concept Behind AI/ML BOM

The idea behind an AI (artificial intelligence) / ML (machine learning) BOM is that as AI-driven systems advance and gain influence, it becomes essential to provide transparency into not only the code that powers them but also the data used in their training and development.

Given that AI technology relies heavily on massive amounts of data to train models on intricate patterns and relationships, the purpose of an AI/ML BOM is to document essential dataset information such as origins, contents, preprocessing procedures, and other relevant details.

Model Details

Information that describes basic metadata and details about a given model can include:

- Model Name: the name of the model.

- Model Type: the type of model, such as “text generation” or “image processing”.

- Model Version & Licenses: the version of the model and any licenses associated with the model’s usage.

- Software Dependencies: any libraries the model relies on.

- Source and References: a link to where the model can be accessed (URL to the model) and any related documentation associated with the model or its developers or maintainers.

- Attestations: digital signatures for the model, the AI/ML BOM, or both, to ensure authenticity and integrity.

- Model Developer: the name of the individual or organization that developed the model.
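
The integrity half of the Attestations field above can be sketched with a plain digest check. This is a simplified illustration, not a full attestation: a real attestation would use a digital signature with managed keys, and the function names and the sample bytes here are assumptions made for the example.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the artifact bytes."""
    return hashlib.sha256(data).hexdigest()


def matches_recorded_digest(artifact: bytes, recorded_hex: str) -> bool:
    """Compare the artifact's digest with the value recorded in the BOM.

    hmac.compare_digest performs a constant-time comparison.
    """
    return hmac.compare_digest(sha256_digest(artifact), recorded_hex.lower())


# Hypothetical model bytes standing in for a real weights file.
model_bytes = b"hypothetical model weights"
recorded = sha256_digest(model_bytes)  # value that would be stored in the BOM

print(matches_recorded_digest(model_bytes, recorded))           # unchanged artifact
print(matches_recorded_digest(model_bytes + b"!", recorded))    # modified artifact
```

A digest alone shows that the artifact has not changed since the BOM was written; it says nothing about who produced it, which is why the field calls for signatures.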

Model Architecture

Information about how the model was built and tested can include:

- Parent Model: the name, version, and source of the parent model that the current model is based on.

- Base Model: the name, version, and source of the base (or “foundation”) model on which the current model is based.

- Model Architecture and Architecture Family: the name of the architecture & architecture family.

- Hardware and Software: information about the hardware & software used to run or train the model.

- Required Software Download: whether the model requires the installation of other software libraries to execute.

- Datasets: the names, versions, and links to the source of the datasets used to train the model, as well as their proper usage (such as licensing).

- Input and Output: the input & output the model expects, such as text or images.

Model Usage

The model developer’s guidance:

- Intended usage: how the model was designed to be used and should be used.

- Misuse or malicious use: examples of usage that constitute improper use of the model.

- Out-of-scope usage: use cases not part of the model’s intended scope.

Model Considerations

Considerations and other information about model limitations:

- Ethical considerations: guidance or information about how the model developer accounted for and mitigated potential ethical concerns.

- Environmental impact: information about the environmental impact of training and using this model, such as its carbon footprint (CO2 emissions).
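
Taken together, the four groups of fields above could be captured in a small machine-readable record. The following Python sketch is one possible shape, not a standard schema: the class names, field names, and sample values are assumptions, and only a subset of the listed fields is shown.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ModelDetails:
    name: str
    model_type: str
    version: str
    licenses: list[str]
    developer: str
    source_url: str
    dependencies: list[str] = field(default_factory=list)


@dataclass
class ModelArchitecture:
    architecture: str
    base_model: Optional[str] = None
    parent_model: Optional[str] = None
    datasets: list[str] = field(default_factory=list)
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)


@dataclass
class ModelUsage:
    intended: str
    misuse: list[str] = field(default_factory=list)
    out_of_scope: list[str] = field(default_factory=list)


@dataclass
class ModelConsiderations:
    ethical: str = ""
    environmental_impact: str = ""


@dataclass
class AIBOM:
    details: ModelDetails
    architecture: ModelArchitecture
    usage: ModelUsage
    considerations: ModelConsiderations

    def to_json(self) -> str:
        # asdict recurses into nested dataclasses, so the output mirrors the sections.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Hypothetical record, for illustration only.
record = AIBOM(
    details=ModelDetails(
        name="example-model",
        model_type="text generation",
        version="1.0.0",
        licenses=["Apache-2.0"],
        developer="Example Org",
        source_url="https://example.com/models/example-model",
        dependencies=["numpy==1.26.4"],
    ),
    architecture=ModelArchitecture(
        architecture="transformer",
        base_model="example-base 0.9",
        datasets=["example-dataset v2"],
        inputs=["text"],
        outputs=["text"],
    ),
    usage=ModelUsage(intended="summarizing internal documents"),
    considerations=ModelConsiderations(environmental_impact="not measured"),
)

print(record.to_json())
```

Keeping the record as structured data rather than free text is what allows it to be validated and scanned automatically later on.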

The Need for Automated Risk Management with AI-generated Code

Implementing an AI/ML BOM makes sense from a risk management standpoint because it provides visibility into the complex data and components involved, including AI-generated code. At the same time, it complicates the processes engineers already manage.

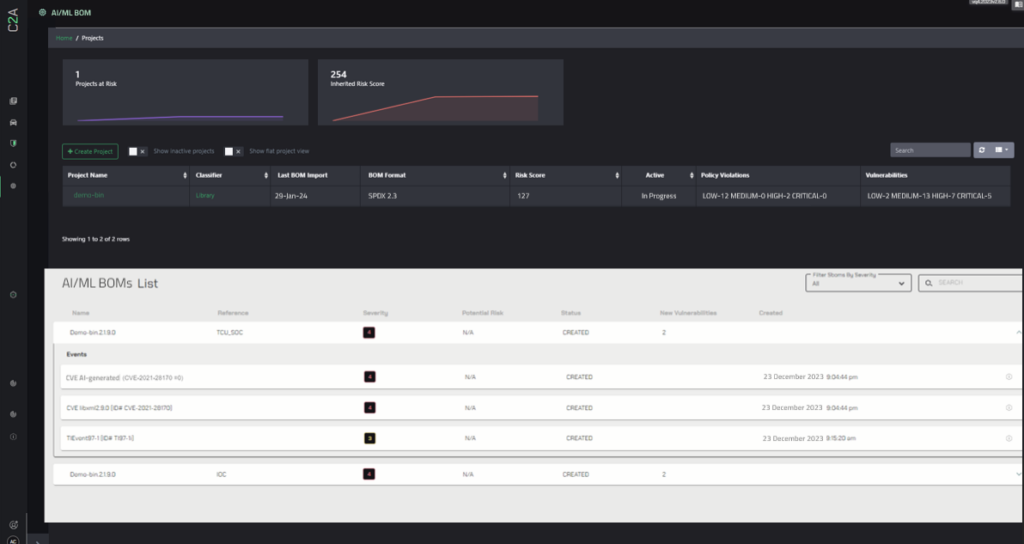

To make the AI/ML BOM work in practice, the processes around it must be automated and streamlined. C2A Security’s EVSec addresses this with its BOM & Vulnerability Management and the EVSec Generative AI Infrastructure Layer. C2A Security’s approach to Generative AI strengthens EVSec’s risk-driven DevSecOps platform, enabling swift risk identification and proactive vulnerability management, including for AI-generated code.

Leveraging GenAI for Automated BOM & Vulnerability Management

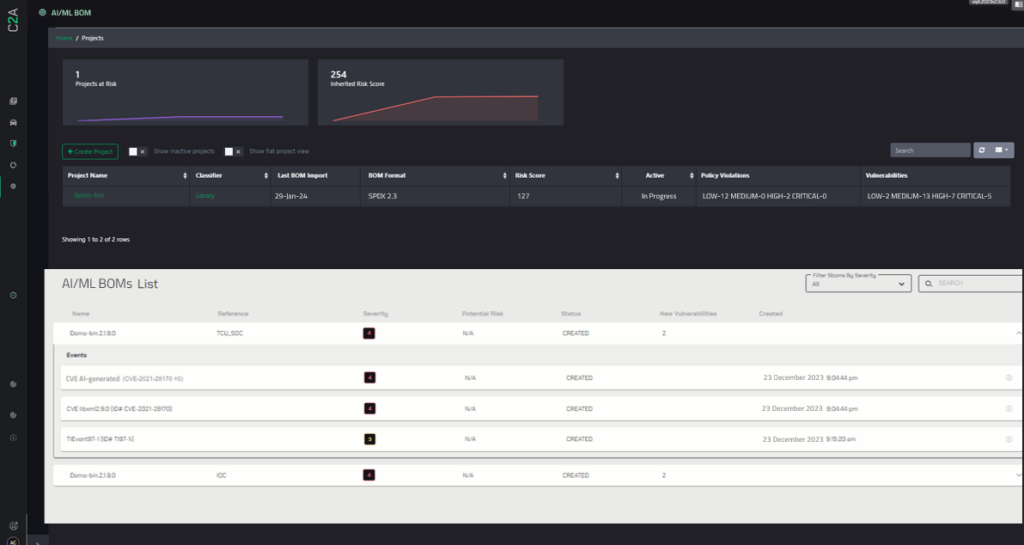

EVSec’s BOM management, analysis, and scanning capabilities allow for automated BOM validation, including dependency, license, and weakness analysis, to keep the BOM complete and consistent. EVSec can manage vulnerabilities related to AI/ML BOM components, providing visibility and management of the vulnerable components based on their true risk impact.
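
To make the idea of automated BOM validation concrete, here is a generic Python sketch of field, license, and dependency checks. It does not represent EVSec's implementation or API; the `validate_bom` function, the required fields, and the license allow-list are all hypothetical.

```python
# Hypothetical policy values, for illustration only.
ALLOWED_LICENSES = {"Apache-2.0", "MIT", "BSD-3-Clause"}
REQUIRED_FIELDS = ("name", "version", "licenses", "dependencies")


def validate_bom(bom: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means the BOM passed."""
    problems = []

    # Completeness: every required field must be present.
    for key in REQUIRED_FIELDS:
        if key not in bom:
            problems.append(f"missing field: {key}")

    # Licenses: each declared license must be on the allow-list.
    for lic in bom.get("licenses", []):
        if lic not in ALLOWED_LICENSES:
            problems.append(f"license not on allow-list: {lic}")

    # Dependencies: each entry must be pinned as "name==version".
    for dep in bom.get("dependencies", []):
        name, sep, version = dep.partition("==")
        if not name or not sep or not version:
            problems.append(f"dependency not pinned to a version: {dep}")

    return problems


# Hypothetical BOM entry with two deliberate problems.
bom = {
    "name": "example-model",
    "version": "1.0.0",
    "licenses": ["GPL-3.0"],
    "dependencies": ["numpy==1.26.4", "torch"],
}

for problem in validate_bom(bom):
    print(problem)
```

Returning a list of problems instead of stopping at the first failure lets one pass report everything that needs attention, which fits the goal of not adding work for engineers.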

Key Takeaways

1. The EVSec BOM and Vulnerability Management product reduces the effort and cost of assessing a vulnerability's impact, and helps identify, prioritize, and mitigate new vulnerabilities.

2. EVSec’s AutoSynth GenAI layer is the underlying technology for multiple risk-driven automations including data validation and automatic Cyber Model mapping of the AI/ML BOM.

3. The AutoSynth layer offers an automated way to analyze the impact and potential mitigations of threats in depth, reducing the effort and time required compared with traditional threat intelligence performed by multiple analysts and with basic vulnerability management.